Recording, notebook. All the material can also be found here.

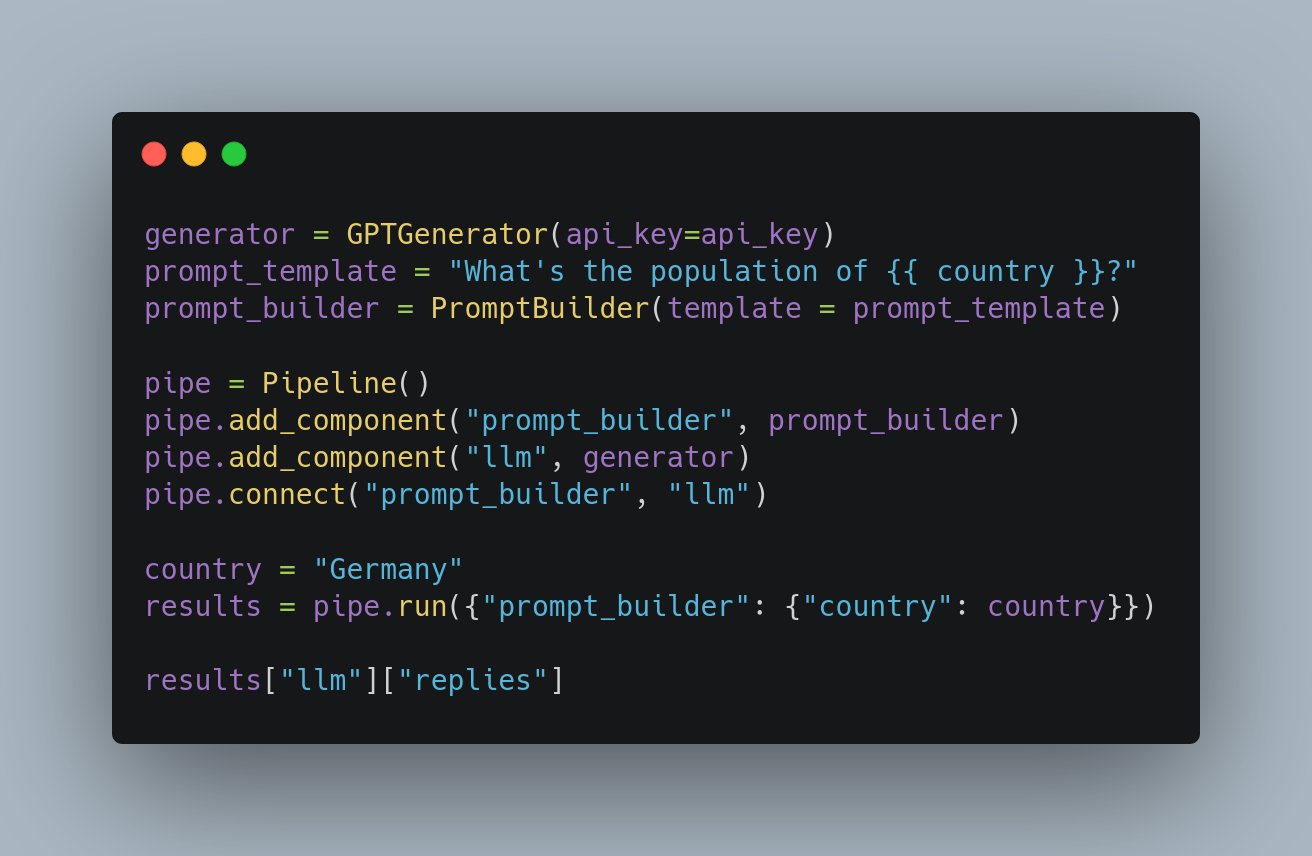

In this Office Hours I walk through the LLM support that Haystack 2.0 offers to date: Generator, PromptBuilder, and how to connect them to different types of Retrievers to build Retrieval Augmented Generation (RAG) applications.

In under 40 minutes we go from a simple query to ChatGPT to a full pipeline that retrieves documents from the Internet, splits them into chunks, and feeds them to an LLM to ground its replies.
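To make the retrieve, split, and prompt flow concrete, here is a minimal sketch in plain Python. It does not use Haystack's API: every function name (`split_into_chunks`, `retrieve`, `build_prompt`, `fake_llm`, `rag`) is hypothetical, the retriever is a naive word-overlap ranking, and the LLM is a stub that returns a placeholder string. It only illustrates the shape of the pipeline, not how the talk implements it.

```python
import re


def split_into_chunks(text, words_per_chunk=8):
    """Split a document into fixed-size chunks of whitespace-separated words."""
    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


def score(query, chunk):
    """Count distinct lowercase words shared by the query and the chunk."""
    query_words = set(re.findall(r"\w+", query.lower()))
    chunk_words = set(re.findall(r"\w+", chunk.lower()))
    return len(query_words & chunk_words)


def retrieve(query, chunks, top_k=2):
    """Return the top_k chunks ranked by word overlap (a toy retriever)."""
    return sorted(chunks, key=lambda c: score(query, c), reverse=True)[:top_k]


def build_prompt(query, context_chunks):
    """Fill a simple prompt template with the retrieved context."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def fake_llm(prompt):
    """Stand-in for a real LLM call (assumption: no network, no API key)."""
    return f"[stub reply to a prompt of {len(prompt)} characters]"


def rag(query, documents, top_k=2):
    """Chunk the documents, retrieve the best chunks, and prompt the stub LLM."""
    chunks = [c for doc in documents for c in split_into_chunks(doc)]
    prompt = build_prompt(query, retrieve(query, chunks, top_k))
    return prompt, fake_llm(prompt)
```

In a real application each of these functions would be replaced by a pipeline component, with the stub swapped for an actual call to an LLM.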

The talk also shows, indirectly, how Pipelines help users compose these systems quickly, visualize them, and connect their different parts by producing verbose error messages.